LoL: Pro AI Predicted Champion Tier List - Patch 12.11 Results

If you aren’t sure what the AI Champion Tier List Challenge is, then I suggest checking out the article I wrote to introduce it. If you have an aversion to pressing links, then here’s the tl;dr:

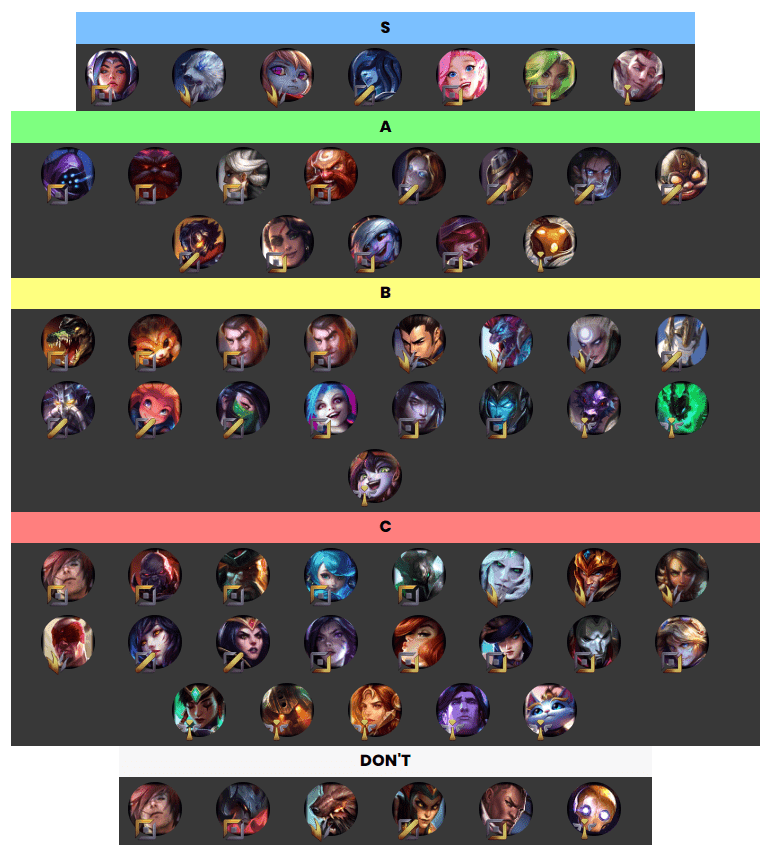

"Every patch we’ll use the iTero AI to rank each Champion into a tier-list for pro play. Once all the major regions have finished playing on said patch, we’ll compare the predictions to the actual results. The challenge is that, by the end of the season, S-tier Champions should have performed better than A-tier, A-tier better than B-tier, and so on."

The Results

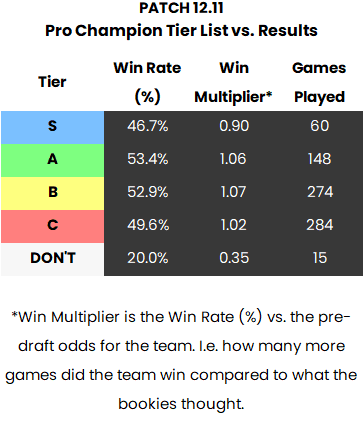

Without further ado, here are the summary results from patch 12.11 (the original tier-list is found further down):

iTero Gaming AI Predicted Pro Champion Tier List for Patch 12.11: results

First, let’s get comfortable with what we’re seeing.

All these results are from Patch 12.11 (obviously) and include every** game from the four major regions (LEC, LCS, LCK, LPL).

**I’m aware of some ongoing data issues where one or two games occasionally go missing from a patch. I’ll do my best to prevent this!

As an example, the AI ranked Aphelios as a B-tier pick. Therefore, all his games on the patch (32) are part of the 274 games played on B-tier picks. Similarly, his win rate (53.1%) contributes to the average win rate for the tier of 52.9%.
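To make the roll-up concrete, here is a minimal sketch of how per-Champion results could be pooled into a tier summary. It assumes the tier win rate is total wins divided by total games (the article only says "average", so a simple mean of Champion win rates is also possible). Aphelios’ row uses the figures above (17 wins from 32 games is 53.1%); the second row and the function name `summarise_by_tier` are made up purely for illustration.

```python
from collections import defaultdict

# (champion, tier, games, wins)
# Aphelios: 32 games at 53.1% (17 wins), taken from the article.
# "ExampleChampion" is a hypothetical placeholder, not real data.
results = [
    ("Aphelios", "B", 32, 17),
    ("ExampleChampion", "B", 20, 10),
]


def summarise_by_tier(rows):
    """Return {tier: (games, win_rate)}, pooling every game played in a tier."""
    games = defaultdict(int)
    wins = defaultdict(int)
    for _champion, tier, n_games, n_wins in rows:
        games[tier] += n_games
        wins[tier] += n_wins
    return {tier: (games[tier], wins[tier] / games[tier]) for tier in games}


summary = summarise_by_tier(results)
b_games, b_win_rate = summary["B"]
print(f"B-tier: {b_games} games, {b_win_rate:.1%} win rate")
```

Pooling by games means a Champion with 32 games moves the tier average far more than one with 3, which seems the fairer choice when pick counts vary this much.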

Therefore, if the AI has done well, all the strong Champions for the patch will have been put in A-tier or above, pushing the average win rate of those tiers above that of the lower tiers.

The Win Rate Multiplier*

This is a little tricky to explain so bear with me, it’s important we get this right.

In essence, the Win Rate Multiplier (WRM) is saying “How many MORE games did teams that pick this Champion win compared to what they were EXPECTED to win”.

The “expected to win” part is calculated by using the average odds from the major betting providers. For instance, when G2 Esports played Team BDS, the average bookie gave BDS a 14% chance to win the game.

To help, let us imagine that G2 Esports played Team BDS 100 times. You would expect BDS to win 14 of these.

If BDS played Aphelios every game and won 28 games, then Aphelios has a 28% win rate.

However, since they were only forecast to win 14 games, they’ve actually won twice as many games as they were expected to win!

So, Aphelios is given a WRM of 2.0x (28 / 14), which is very good — even though his win rate is only 28%.

If they had won 14 games then his WRM is 1.0x (14 / 14), which means they won exactly as many games as expected. If instead they had won 7 games it would have been 0.5x (7 / 14), i.e. half as many wins.

The reason we use this is because there could be a Champion that all the best teams are picking. Because it’s being played only by the best teams, the win rate is inflated not by the performance of the Champion but by the strength of the team! This also could happen vice-versa if lots of bad teams played a Champion, and everything in-between.
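The calculation above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the actual iTero implementation: the function name is made up, expected wins are taken as the sum of the pre-game win probabilities, and the step that turns bookmaker odds into those probabilities is not covered here. The first case reuses the G2 vs BDS thought experiment; the second is a hypothetical strong team, to show a good-looking win rate hiding a below-par WRM.

```python
def win_rate_multiplier(wins, win_probabilities):
    """Actual wins divided by the wins implied by the pre-game odds.

    win_probabilities holds one implied win probability per game played.
    """
    expected_wins = sum(win_probabilities)
    if expected_wins <= 0:
        raise ValueError("expected wins must be positive")
    return wins / expected_wins


# Thought experiment from the article: BDS face G2 100 times at 14% each.
bds_odds = [0.14] * 100
wrm_28 = win_rate_multiplier(28, bds_odds)  # twice as many wins as expected
wrm_14 = win_rate_multiplier(14, bds_odds)  # exactly as expected
wrm_7 = win_rate_multiplier(7, bds_odds)    # half as many as expected

# Hypothetical contrast: a 70% favourite picks a Champion 10 times and wins 6.
favourite_odds = [0.70] * 10
favourite_win_rate = 6 / 10
favourite_wrm = win_rate_multiplier(6, favourite_odds)

print(round(wrm_28, 2), round(wrm_14, 2), round(wrm_7, 2))
print(f"win rate {favourite_win_rate:.0%}, WRM {favourite_wrm:.2f}")
```

In the hypothetical case the Champion shows a 60% win rate, yet the WRM lands below 1.0 because the team was expected to win 7 of those 10 games.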

A Brief Summary

The results overall are actually very promising for a first attempt. Remember, what we want is for every tier’s performance to be better than the one below it.

So, A-tier has 53.4%, B-tier has 52.9%, C-tier has 48.6% and D-tier has a measly 20%. Not too shabby.

The only outlier was S-tier where the win rate was 46.7% for the Champions that should have been our strongest performers.
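The "every tier beats the one below it" check is easy to automate. The sketch below uses the win rates quoted in this summary and, as a simplifying assumption, only compares each tier with the one directly beneath it; the function name is made up for illustration.

```python
# Tier win rates (%) for patch 12.11 as quoted above, best tier first.
tier_win_rates = [("S", 46.7), ("A", 53.4), ("B", 52.9), ("C", 48.6), ("D", 20.0)]


def ordering_violations(tiers):
    """Return (higher, lower) tier pairs where the higher tier did not win more."""
    return [
        (upper, lower)
        for (upper, upper_rate), (lower, lower_rate) in zip(tiers, tiers[1:])
        if upper_rate <= lower_rate
    ]


violations = ordering_violations(tier_win_rates)
print(violations)  # [('S', 'A')]
```

Only the S-tier versus A-tier pair fails, which matches the outlier described above.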

Champion Breakdown

Let us now take a look in more detail at each tier.

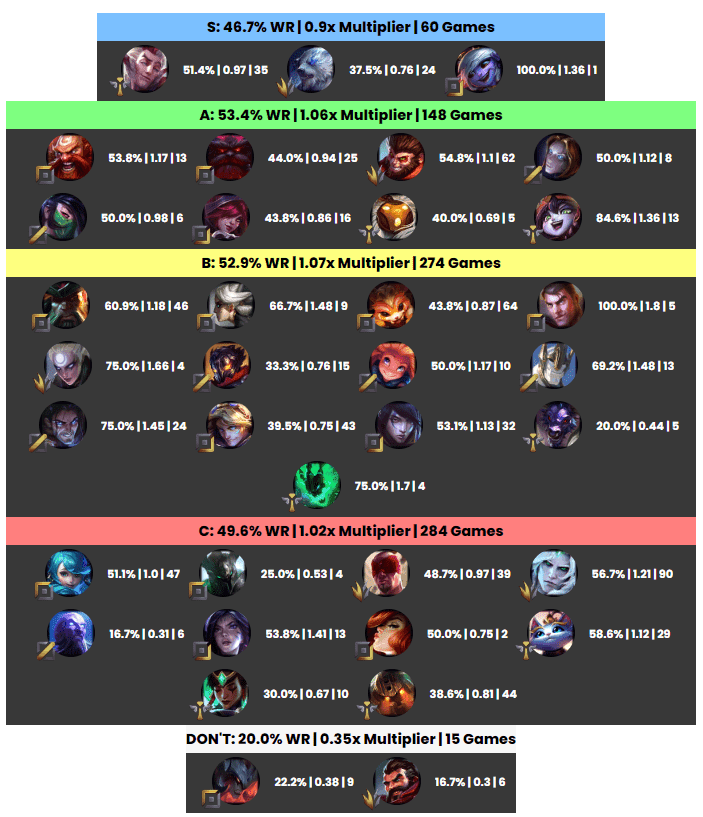

Here are all the results, broken down by tier and by Champion:

iTero Gaming AI Predicted Pro Champion Tier List for Patch 12.11: Champion Breakdown

iTero Gaming AI Predicted Pro Champion Tier List for Patch 12.11: Results by Champion

NOTE: those who went back to check the previous article may have noticed that Yuumi was accidentally put in D-tier AND C-tier in the original tier-list, teething issues!

The S-tier was completely bombed out by the Volibear jungle. With 24 games played and a shocking 37.5% win rate, he was one of the single worst performers of the patch.

On the flip side in C-tier: Viego and Yuumi both rocked above their station with 56.7% and 58.6% win rates respectively.

Also, note how Viego has a lower win rate but a higher WRM than Yuumi. This means that more of the feline’s wins were because of better teams and so this has inflated her win rate slightly.

For future predictions I’ve increased the number of Champions in each tier so next time the few poor predictions won’t have as much of an impact.

The “DON’T” tier certainly came in as expected, with Champions from here only winning 3 of the 15 games played. The multiplier is similarly low, so we know the win rate isn’t being unfairly pulled down by bad teams.

So what now?

Did you know “itero” means to iterate in Latin?

That’s exactly what we’re going to do.

We’ll study the AI and work out why it loved Volibear and hated Yuumi, then test any rules, logic or data we can include to help it better evaluate these Champions next time. Then we’ll do the same in Patch 12.12, and on, and on…

We’ll also track the accumulated performance of each tier by adding each patch’s results to the previous ones. This will be how we measure the success of the challenge.
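As a rough sketch of that running total, the snippet below sums games and wins per tier across patches before working out a cumulative win rate. The data layout and function name are assumptions made for illustration. The 12.11 entry uses the 3 wins from 15 games reported above for the lowest tier; the second patch is a hypothetical placeholder, not a real result.

```python
from collections import Counter


def accumulate(patches):
    """Sum per-tier (games, wins) across patches and return cumulative win rates."""
    games, wins = Counter(), Counter()
    for patch in patches:
        for tier, (n_games, n_wins) in patch.items():
            games[tier] += n_games
            wins[tier] += n_wins
    return {tier: wins[tier] / games[tier] for tier in games if games[tier]}


# Lowest tier on 12.11: 3 wins from 15 games (from the article).
patch_12_11 = {"D": (15, 3)}
# Hypothetical later patch, for illustration only.
patch_later = {"D": (10, 4)}

cumulative = accumulate([patch_12_11, patch_later])
print(f"D-tier cumulative win rate: {cumulative['D']:.0%}")
```

Adding raw games and wins, rather than averaging each patch’s percentage, stops a patch with only a handful of games from counting as much as a busy one.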

All the results will be available at https://itero.gg/esports/tier-list-results and if you’re interested, here is the iTero AI Pro Champion Tier List for Patch 12.12: